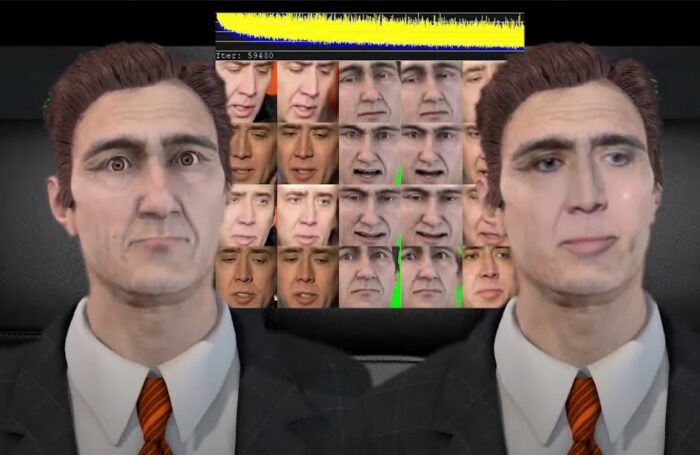

After watching some of the Corridor Crew’s experiments with improving the VFX footage in classic tentpole movies, I wondered if we could use deepfakes to cross the uncanny valley with real-time rendering of MetaHumans and computer-generated avatars.

At present, face replacement algorithms still take a fair bit of processing power, but one day soon they could be built into GPU hardware and be available on tap.

This experiment is deliberately very crude. The mocap is janky and jerky, but even so, the deep learning rendering produces more relatable, believable lip sync and facial expressions. I think it shows that this approach can improve the overall natural appearance. The limitation is that it relies on a fairly large library of face samples. A Nicolas Cage face set comes by default with the open source release.

I also tried it with my own face and this Santa Claus character. The software did an admirable job of matching my face onto another face wearing glasses, although the reflections on the lenses definitely confused it as I turned my head. Hilarious frames toward the end!

I’ll probably try this again with one of Unreal’s MetaHumans when I upgrade to a faster GPU. In the meantime, I’m going to try using EbSynth to attempt the same crossing, using my Photoshop skills to create keyframes based on a StyleGAN face.

I think the tools are coming together to combine real-time animation with deep learning post-production in ways that can rival well-financed, big-budget movies. We truly live in an era when good storytelling is what is most needed, because there are now tools to realize those stories at just about every budget level.